In recent years, artificial intelligence has made astonishing progress, especially with large language models like ChatGPT. These powerful systems can understand and generate human-like text, answer questions, write essays, even pass exams. But behind this success lies an inconvenient truth: these models are extremely hungry for computing power, electricity, and specialized hardware—mostly made by a few companies in a few countries.

The AI world today is built on a model architecture called the Transformer, which has dominated the field since 2017. While it’s powerful, it’s also inefficient—especially when working with long documents. It requires enormous computational resources to keep track of how every word in a sentence relates to every other word, a process that becomes slower and more expensive the longer the text gets. Training and running these models typically depends on NVIDIA GPUs, which are expensive and largely out of reach for countries that want to build AI systems independently.

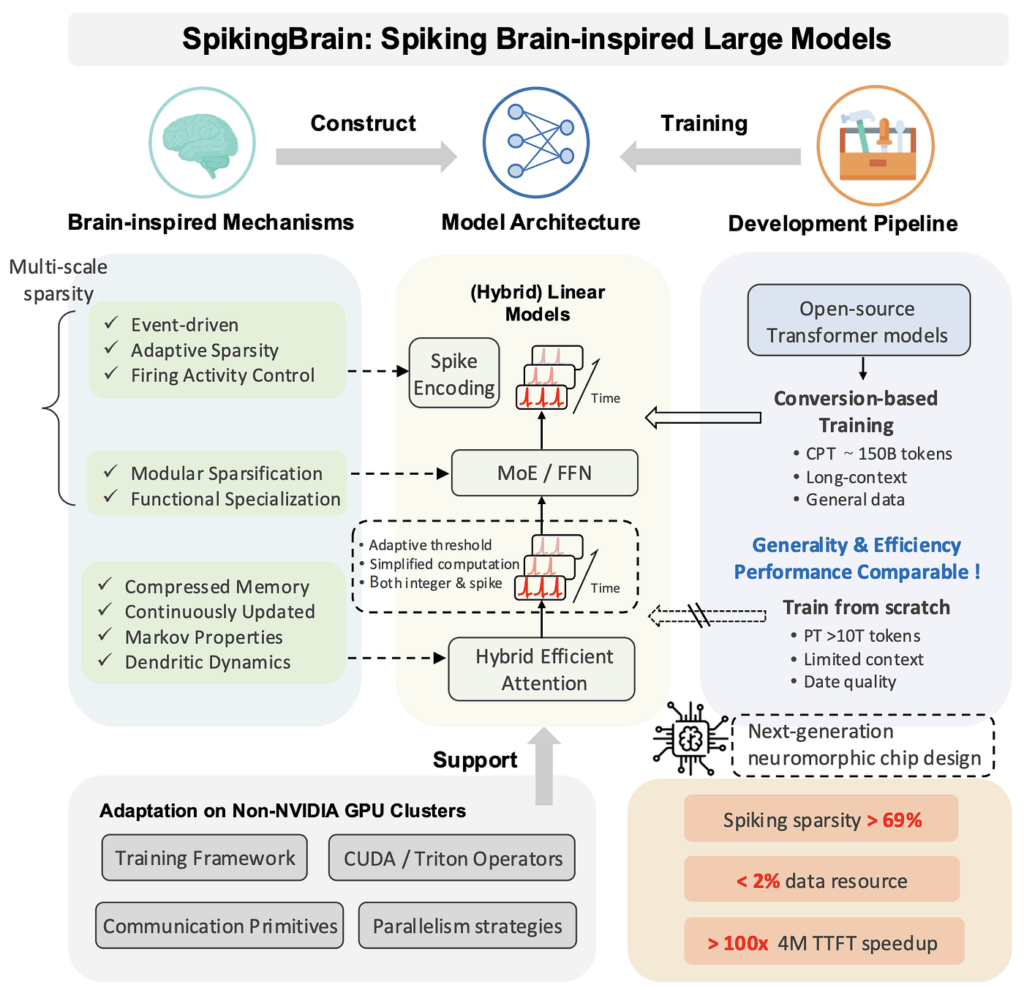

Now, Chinese researchers have introduced something entirely different: a brain-inspired AI model called SpikingBrain 1.0, or Shunxi (瞬悉) in Chinese. Developed by a team led by the Chinese Academy of Sciences, this new model takes inspiration not from computer science, but from biology—specifically, the way the human brain works. And the results are remarkable.

Traditional AI models compute over every input at every step. The human brain works in a very different way. It runs on roughly 20 watts of power, less than a typical incandescent light bulb, yet it handles tasks that still challenge the largest supercomputers. It does this with billions of neurons that fire only when needed. The brain is what scientists call event-driven: it reacts only when something important happens. This is what SpikingBrain is trying to mimic.

SpikingBrain 1.0 is the world’s first large-scale, fully brain-inspired AI model trained entirely on domestically produced Chinese hardware—a significant achievement in a world where most cutting-edge AI still relies on American-designed chips. But what makes it truly unique is not just where it runs, but how it thinks.

Most AI models process language by comparing every word with every other word to figure out what matters. This is called the “attention mechanism”. It works, but it’s slow, especially for long text: the time and memory needed grow quadratically with input length, so doubling the text roughly quadruples the cost. SpikingBrain replaces this attention system with something simpler and faster, modeled more closely on the way neurons process information.
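To see why comparing every word with every other word gets expensive, a rough count helps. The snippet below is only a back-of-the-envelope sketch: the function name `full_attention_pairs` is made up for illustration, and it counts comparisons rather than measuring any real model.

```python
def full_attention_pairs(n_tokens: int) -> int:
    """Count word-to-word comparisons when every word is compared with every word."""
    return n_tokens * n_tokens


# Doubling the text length quadruples the number of comparisons.
for n in (1_000, 2_000, 4_000):
    print(f"{n:>6,} words -> {full_attention_pairs(n):>12,} comparisons")
```

The count is the square of the text length, which is why very long documents are so costly for standard attention.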

Instead of trying to see every word in a document all at once, SpikingBrain breaks it down in a more organized, efficient way. It uses methods like sliding windows, which focus only on nearby words at any given time, and compressed representations, which summarize longer passages. Think of it like a person reading an article, understanding each paragraph before moving on, rather than trying to memorize the whole article in one glance.
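The sliding-window idea can be sketched in a few lines. This is an illustration under assumptions, not SpikingBrain's actual code: the helper names and the window size of 3 are hypothetical, and the window here looks only backward from the current word.

```python
def window_positions(i: int, window: int) -> range:
    """Positions that word i may look at: itself and up to window-1 earlier words."""
    start = max(0, i - window + 1)
    return range(start, i + 1)


def sliding_window_pairs(n_tokens: int, window: int) -> int:
    """Total comparisons when every word looks only inside its own window."""
    return sum(len(window_positions(i, window)) for i in range(n_tokens))


# Word 5 with a window of 3 sees only words 3, 4 and 5.
print(list(window_positions(5, 3)))

# The total grows in step with text length instead of with its square.
for n in (1_000, 2_000, 4_000):
    print(f"{n:>6,} words -> {sliding_window_pairs(n, window=3):>8,} comparisons")
```

Because each word looks at a fixed number of neighbors, doubling the text roughly doubles the work rather than quadrupling it.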

Another key ingredient in SpikingBrain is the use of “experts”: smaller specialized modules inside the model that activate only when they’re needed. In a simplified picture, if the model is answering a legal question, only the “legal expert” gets involved, while the rest of the model stays quiet. This method, called Mixture of Experts, allows SpikingBrain to have a massive number of parameters (the internal settings that allow AI to learn) without a matching increase in the computation spent on each input, since only a few experts run at a time. It’s like having a team of advisors, but only calling on the ones who know the subject.
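A toy version of expert routing might look like the sketch below. Everything in it is an assumption made for illustration: the four "experts" are trivial functions, the router scores are typed in by hand (a real model learns to produce them from the input), and picking the top 2 is just one common choice.

```python
import math


def softmax(scores):
    """Turn raw router scores into probabilities that sum to 1."""
    m = max(scores)
    exps = [math.exp(s - m) for s in scores]
    total = sum(exps)
    return [e / total for e in exps]


def route_top_k(router_scores, k):
    """Pick the k highest-scoring experts and renormalize their weights."""
    probs = softmax(router_scores)
    top = sorted(range(len(probs)), key=lambda i: probs[i], reverse=True)[:k]
    norm = sum(probs[i] for i in top)
    return [(i, probs[i] / norm) for i in top]


def moe_forward(x, experts, router_scores, k=2):
    """Run only the chosen experts and blend their outputs by weight."""
    chosen = route_top_k(router_scores, k)
    return sum(w * experts[i](x) for i, w in chosen)


# Four stand-in experts; only two of them run for this input.
experts = [lambda x: x + 1, lambda x: 2 * x, lambda x: -x, lambda x: x * x]
scores = [0.1, 2.0, -1.0, 1.5]  # hand-written scores, for illustration only

print(route_top_k(scores, k=2))
print(moe_forward(3.0, experts, scores, k=2))
```

The model keeps all four experts in its parameter count, but each input pays only for the two that are selected.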

But the most futuristic aspect of SpikingBrain is the use of spikes: short bursts of digital signals that mimic the firing of neurons. Traditional AI uses continuous values, but the brain doesn’t. It communicates through pulses, or spikes, which only happen when necessary. SpikingBrain uses a similar approach: its artificial neurons fire only when the incoming signal is strong enough to cross a threshold. This makes the model dramatically more efficient. Tests show that up to 70% of its computation can be skipped because nothing needs to happen until a spike is triggered. In theory, this could make it up to 43 times more energy-efficient than traditional AI models.
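The threshold idea can be illustrated with a toy example. This is a simplified sketch, not the coding scheme SpikingBrain itself uses: the function names, the fixed threshold of 0.5, and the sample numbers are all made up for illustration.

```python
def to_spikes(activations, threshold):
    """Emit +1 or -1 when a signal crosses the threshold, otherwise 0 (no spike)."""
    spikes = []
    for a in activations:
        if a >= threshold:
            spikes.append(1)
        elif a <= -threshold:
            spikes.append(-1)
        else:
            spikes.append(0)
    return spikes


def event_driven_sum(activations, weights, threshold):
    """Accumulate weights only where a spike occurred; silent inputs cost nothing."""
    total = 0.0
    performed = 0
    for spike, w in zip(to_spikes(activations, threshold), weights):
        if spike != 0:
            total += spike * w
            performed += 1
    skipped_fraction = 1 - performed / len(activations)
    return total, skipped_fraction


# Hand-picked toy values: most signals are too weak to trigger a spike.
activations = [0.05, 0.9, -0.02, 0.0, 1.2, 0.1, -0.7, 0.03, 0.01, 0.2]
weights = [1.0, 2.0, 3.0, 4.0, 5.0, 6.0, 4.0, 8.0, 9.0, 10.0]

total, skipped = event_driven_sum(activations, weights, threshold=0.5)
print(f"result = {total}, skipped {skipped:.0%} of the inputs")
```

Only inputs that cross the threshold trigger any work, which is where the savings come from. How much is skipped in practice depends on the data and the threshold; the toy numbers here were chosen by hand.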

Even more impressively, this model was trained and deployed entirely on MetaX GPUs, China’s own AI chips. While the global AI race has so far been dominated by companies using NVIDIA chips, SpikingBrain proves that a fully self-reliant AI system is possible. The researchers trained two versions of the model—one with 7 billion parameters and another massive one with 76 billion—entirely on MetaX’s computing clusters, running for weeks without interruption. These are not experimental toys—they’re production-ready models.

And how do they perform? Surprisingly well. The smaller 7B model performed on par with global models like LLaMA-2 and Mixtral. It can read and process documents millions of words long—something that would choke or crash most Transformer-based systems. It also runs up to 15 times faster than comparable models on regular CPUs, meaning it could one day power smart assistants, search engines, or scientific tools even on low-power devices like phones or tablets.

But perhaps the most important thing about SpikingBrain is what it represents: a new path for AI development. For years, AI progress has followed a single road—bigger models, more data, more computing power, and more energy use. This “more is better” approach is starting to hit its limits. What SpikingBrain offers is something radically different: a model built on the principles of biology, efficiency, and independence. It’s not just copying the brain for inspiration—it’s rebuilding AI from the inside out.

By focusing on what the brain does best—sparse, efficient, specialized processing—SpikingBrain opens the door to a future where artificial intelligence can be both powerful and sustainable. It also gives China a strong foothold in a new and emerging field of “neuromorphic” AI: machines that don’t just act smart, but actually think in brain-like ways.

This is not just about speed or power. It’s about sovereignty, creativity, and charting a new course. SpikingBrain is still in its early stages, but it may be remembered as the moment when AI research began to look not just at silicon, but at biology—when we stopped trying to force machines to think like machines, and started helping them think more like us.

In a world increasingly shaped by artificial intelligence, that may be the smartest idea of all.

Source: Sohu, Tencent, gopenAI, AITOP100