Releasing a self-driving car is gradually making its way onto the roadmaps of various companies, which are betting that the use of artificial intelligence in driving is inevitable. They are all trying to seize the opportunity with massive investment and ambitious initiatives.

Many engineers claim that the algorithms and technologies used in self-driving cars are safe enough: no matter how intelligent the car becomes, it will always follow its program and do the right thing. There are plenty of machine learning conferences that discuss how to build a perfect self-driving car. From users to producers, everyone seems more excited than worried about self-driving cars, but reality highlights the risks.

This month, TikTok user @blurrblake shared a video titled “When your car is A Better Driver than you”. In the video, four young men drink beer and sing “Baby” together while the car, on Autopilot, drives itself at increasing speed along a highway in California, eventually reaching 96 km/h. The video drew the attention of local authorities, and the men were later accused of breaking California’s open container laws. Under those laws, it is illegal for a person to drive with an opened alcoholic beverage in the vehicle, even if the alcohol is not being consumed, and drinking in a vehicle is forbidden.

This offense usually carries a fine of $250, and if the four young men are under 21, they will face a misdemeanor, punishable by up to six months in jail and a maximum fine of $1,000. The local authorities, however, did not mention any penalty for Tesla at all. Luckily there was no accident, but if they keep doing this, one might happen someday. When it does, who will take responsibility? Will it be the car or the passenger?

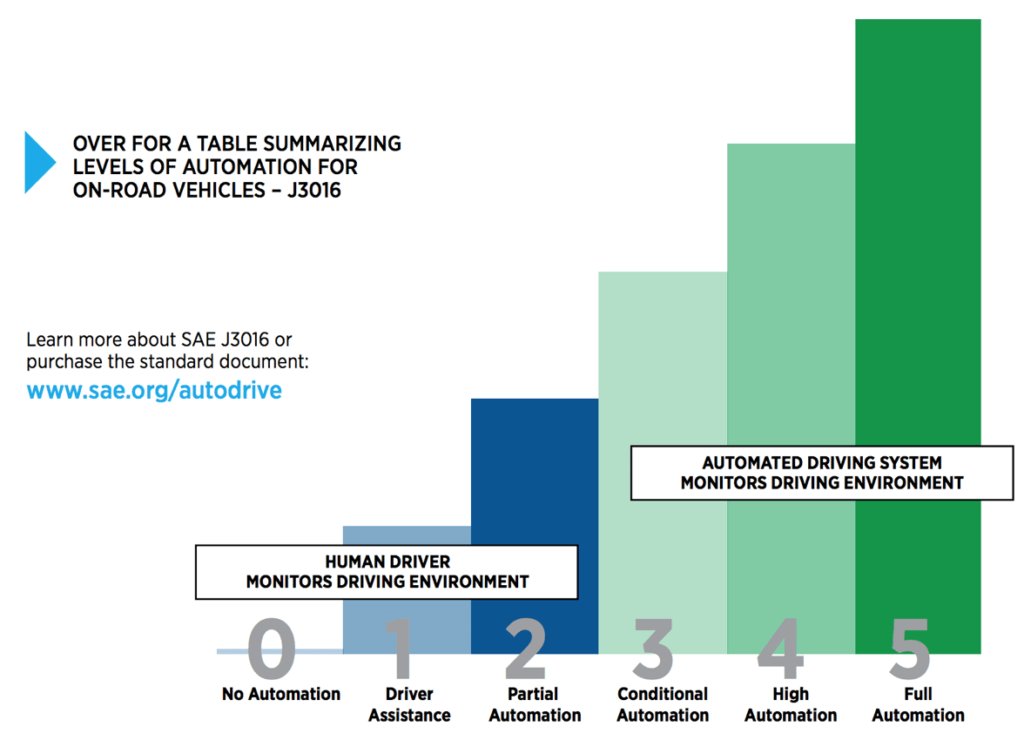

So far, no so-called self-driving car has reached full automation. Even Tesla’s Autopilot still needs the person in the driver’s seat to supervise the driving, which lets companies play with the definition of self-driving. “Self-driving” is a very broad and vague term; SAE International (formerly the Society of Automotive Engineers) defines it in several levels:

- Level 1 automation means that some small steering or acceleration tasks are performed by the car without human intervention, but everything else is fully under human control.

- Level 2 automation is like advanced cruise control: the car can automatically take safety actions, but the human needs to stay alert at the wheel.

- Level 3 automation allows the human to hand over “safety-critical functions” to the car under certain traffic or environmental conditions, which raises dangers because the major tasks of driving can pass back and forth between the human and the car.

- Level 4 automation is a car that can drive itself almost all the time without a human, but might be programmed not to drive in unmapped areas or during severe weather.

- Level 5 automation refers to full automation in all conditions.
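The levels above can be read as a small lookup table. The sketch below is only an illustration of that reading, not an official SAE artifact: the names `AutomationLevel` and `human_must_stay_alert` are hypothetical, and the cut-off between levels 3 and 4 is an assumption drawn from the descriptions in this list.

```python
from enum import IntEnum


class AutomationLevel(IntEnum):
    """Levels 1 to 5 as summarized in the list above (names are hypothetical)."""
    SMALL_TASKS = 1          # minor steering or acceleration help
    ADVANCED_CRUISE = 2      # automatic safety actions, human alert at the wheel
    CONDITIONAL_HANDOVER = 3 # human hands over control under certain conditions
    MOSTLY_SELF_DRIVING = 4  # drives itself except in unmapped areas or bad weather
    FULL_AUTOMATION = 5      # full automation in all conditions


def human_must_stay_alert(level: int) -> bool:
    """Assumption: through level 3 a human must be ready to drive at any moment."""
    return level <= AutomationLevel.CONDITIONAL_HANDOVER


if __name__ == "__main__":
    for level in AutomationLevel:
        print(level.value, level.name, human_must_stay_alert(level))
```

Under this reading, a car sold as “self-driving” but operating at level 2 or 3 still returns `True`: someone in the driver’s seat remains responsible for watching the road.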

Ordinary people are not familiar with this industry standard, and car companies do not want to clarify it either, which lets them play on the public’s understanding of self-driving cars. Last week, the American Automobile Association (AAA) released a report noting that the names given to vehicle programs and systems affect how people understand them. Many people think a self-driving car is truly automatic, with no human intervention at all or as little as possible.

Currently, no car has achieved level 5. Companies are focusing on level 4, while all the self-driving cars available on the market are closer to level 2 or level 3. Google was the first company to start a self-driving car business, and it gave up on level 3 outright, the level at which the human and the program share control of the driving. Many other companies also prefer to skip level 3 and go directly to level 4 and level 5, placing the responsibility for safety on the self-driving system.

However, Elon Musk thinks differently. He firmly believes that self-driving can progress step by step from a lower level to a higher one, and that achieving full automation requires imitating the human driving process.

Tesla’s Autopilot is advertised as a highly automated system that keeps the car centered in its lane, brakes automatically, and maintains driving speed. Because of the name “Autopilot”, however, many users think there is no need to pay attention to the driving, even though drivers are required to keep their hands on the wheel and their eyes on the road while using it. Public controversy over the name “Autopilot” has never stopped. Elon Musk, Tesla’s CEO, insists that the name does not overstate what the system does. He has not commented on any accidents involving Autopilot, and is focused on finishing the basic features of level 5 for the new car within this year.

(Source: Shouse California Law group / Emerj Artificial Intelligence Research / arstechnica / Mashable Middle East / sohu.com)