The most striking AI application since AlphaGo, ChatGPT has overturned public perceptions of chatbots and reawakened concerns about AI since its launch at the end of November 2022.

Will large language models like ChatGPT be used to produce false information and hate speech? Who owns the copyright to the content they generate, and who is responsible for it?

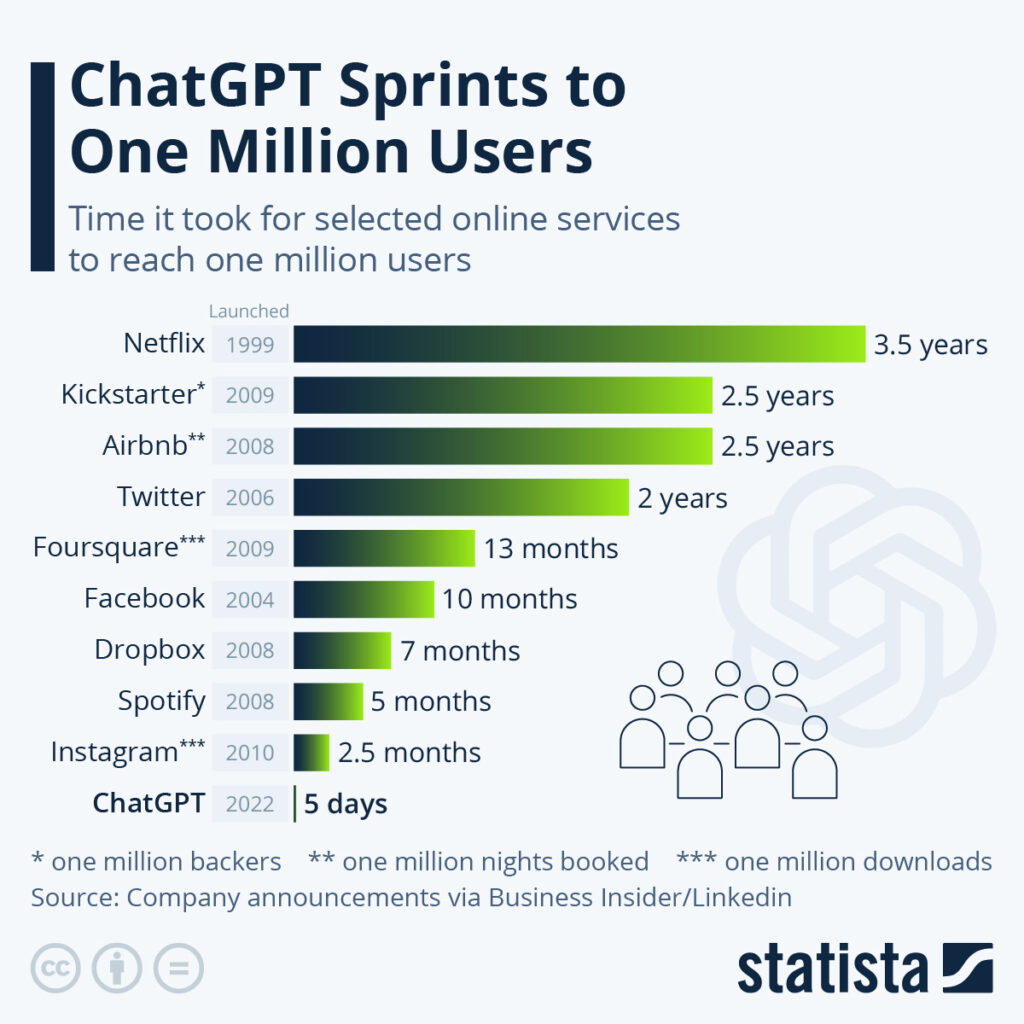

According to UBS data, ChatGPT reached 100 million monthly active users just two months after its launch in late November. By comparison, TikTok took about nine months to reach 100 million users, and Instagram more than two years. In the 20 years the internet sector has been growing, it is hard to name any internet application that has grown faster than ChatGPT.

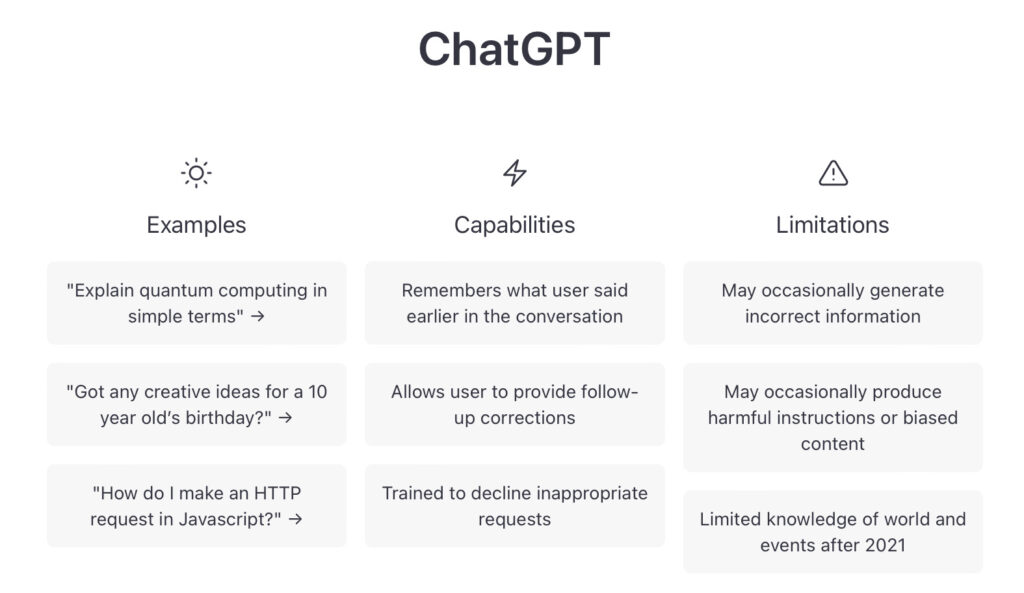

ChatGPT is a chatbot developed by OpenAI and built on a large language model (LLM), which generates human-like text after being trained on vast amounts of written content. For capital markets, ChatGPT has raised the bar for natural language processing (NLP) and dramatically expanded expectations of what AI can do, drawing intense attention from investors at home and abroad.

From a technical standpoint, most AI products involve little substantive innovation; they are applications of existing AI techniques. AlphaGo's debut in 2016 set off a wave of AI investment, but it was only a program for playing Go, with little everyday use. ChatGPT has no such limitation: anyone can converse with it, which makes it likely to trigger a new AI investment boom.

Following ChatGPT's lead, more and more technology companies are joining the AI race. Microsoft, Google, Meta, and other U.S. technology giants have moved aggressively into the field and significantly increased their AI investment. Although ChatGPT is far from perfect, it is reshaping how we perceive AI and reviving anxiety about it.

ChatGPT has already created quite a stir in academic and educational circles. Recently, several scientific journals, including Science, Nature, and Oncology, have issued statements rejecting papers that list ChatGPT as a co-author.

ChatGPT has also been banned to varying degrees by education systems in the United States, Australia, France, and other countries, either by blocking access to it on public school devices and networks or by treating its use as plagiarism. Others have developed software that analyzes text to detect whether it was generated by AI.

The emergence of ChatGPT has once again triggered anxiety about "technological unemployment" and an "existential crisis" across industries. In these discussions, analysts, journalists, programmers, teachers, and lawyers have been singled out as "high-risk occupations" that ChatGPT may replace.

The economist John Maynard Keynes predicted that, as society progressed, people in the 21st century would enjoy far more leisure, needing to work only 15 hours a week. Instead, people are busier today: technological advances have created new needs and, with them, new opportunities, including jobs.

Regulating AI tools such as ChatGPT is also a pressing issue. Ethicists study the social impacts that science and technology may have, usually through critique and debate, but AI is an exception: there is broad consensus that AI technology should be subject to ethical and legal constraints.

Because new technologies keep emerging and ethics is more flexible than law, ethicists should propose a framework for ethical governance that is more broadly applicable, wider in scope, and longer-lasting.

Notably, in 2022 China issued, in quick succession, the Opinions on Strengthening the Ethical Governance of Science and Technology and the Regulations on the Management of Deep Synthesis of Internet Information Services. The Wall Street Journal recently noted that China, a pioneer in algorithmic regulation, has turned its attention to deep synthesis technology.

China's new rules on deep synthesis technology mark the first time a major regulator anywhere has made a comprehensive attempt to restrict this "most explosive and controversial" new AI technology.

Misuse of deep synthesis technology to generate deepfake content continues to grow worldwide. Graham Webster, a research scholar at Stanford University and head of the Digital China Project, said China is joining the rest of the world in grasping the potential impact of these technologies and is moving more quickly toward mandatory rules and enforcement.

Webster said the Chinese regulations will provide a case study for observers outside China to examine how such rules might work in the real world and how they might affect businesses. U.S. lawmakers have also tried to address the proliferation and potential abuse of deepfake content, but those efforts have stalled over free-speech concerns. Meanwhile, according to Reuters, the EU's proposed AI rules would address concerns about the risks posed by ChatGPT and similar AI technology.

(Source: Forbes, Xtract, Statista)