On June 15, 2023, Baichuan Intelligence, dubbed China’s “ChatGPT Dream Team,” launched baichuan-7B, a 7-billion-parameter large language model pre-trained on Chinese and English.

Baichuan-7B not only outperforms other large models such as ChatGLM-6B by a significant margin on the authoritative Chinese benchmarks C-Eval, AGIEval, and Gaokao, but also leads LLaMA-7B on MMLU, an authoritative English benchmark.

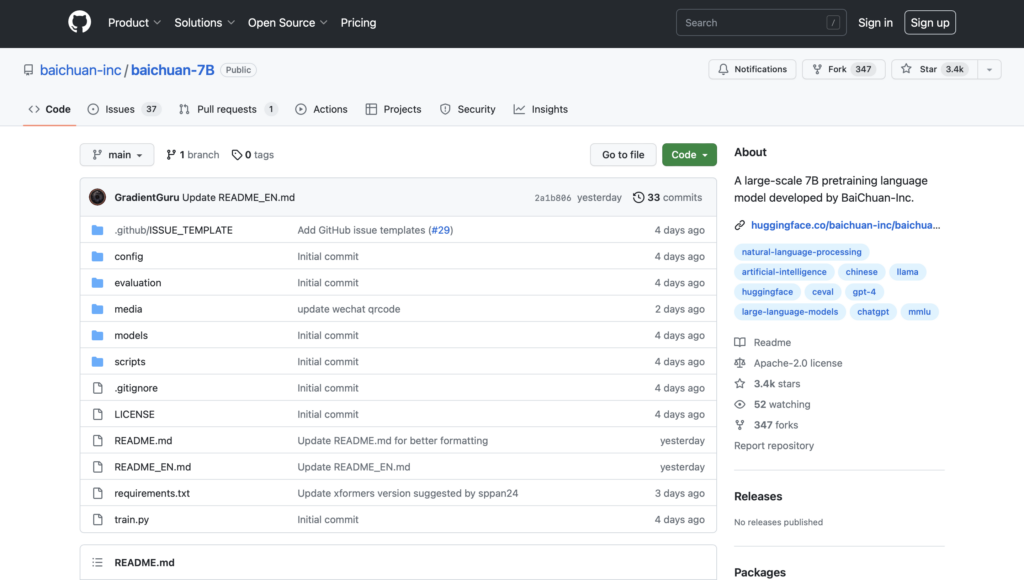

The baichuan-7B model has been released on Hugging Face, GitHub, and ModelScope so that developers and researchers worldwide can test it.

Strong results on Chinese and English benchmarks

To validate the model’s capabilities, baichuan-7B was evaluated on three of the most influential Chinese benchmarks, C-Eval, AGIEval, and Gaokao, and achieved excellent results on all of them. The C-Eval benchmark, created by Shanghai Jiao Tong University, Tsinghua University, and the University of Edinburgh, covers 52 disciplines.

The AGIEval benchmark, launched by Microsoft Research, aims to comprehensively assess how well foundation models handle tasks related to human cognition and problem-solving. It includes 20 official, publicly available, and rigorous admission and professional qualification exams, such as China’s college entrance exam and national judicial exam, and the SAT, LSAT, GRE, and GMAT in the United States.

The Gaokao benchmark, created by a team of researchers at Fudan University, uses questions from China’s college entrance exam as its dataset to test large models’ Chinese language comprehension and logical reasoning.

Baichuan-7B performs well not only in Chinese but also in English. On MMLU, it scored significantly higher than LLaMA-7B, an open-source English pre-trained model.

MMLU was created by the University of California, Berkeley, and other leading universities. It brings together 57 subjects spanning science, engineering, mathematics, the humanities, and the social sciences, and its main goal is to test a model’s interdisciplinary knowledge in English in depth, with content ranging from the elementary level to the advanced professional level.

How baichuan-7B was built

The training corpus is crucial to how well a large model trains. To build the pre-training corpus, Baichuan Intelligence used high-quality Chinese data as the foundation and incorporated high-quality English data. For data quality, a quality model scores the data, and the raw dataset is filtered precisely at both the document level and the sentence level. For content diversity, the data is clustered at multiple levels and granularities using an in-house, very-large-scale locality-sensitive hashing (LSH) clustering system and a semantic clustering system. The result is a pre-training dataset of 1.2 trillion tokens that balances quality and diversity, more than 50% larger than the data used by other open-source Chinese pre-trained models of the same parameter scale.
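The article does not describe the internals of Baichuan’s in-house LSH clustering system. As a rough illustration of the general technique only, here is a minimal MinHash plus banded-LSH sketch that groups near-duplicate documents. The shingle size, number of hash functions, band layout, and every function name below are assumptions chosen for illustration; this is not Baichuan’s implementation.

```python
import hashlib
from collections import defaultdict

# Illustrative parameters (assumptions, not Baichuan's settings).
NUM_HASHES = 64             # length of each MinHash signature
BANDS = 16                  # the signature is split into 16 bands...
ROWS = NUM_HASHES // BANDS  # ...of 4 values each
SHINGLE = 5                 # character n-gram size


def shingles(text: str, k: int = SHINGLE) -> set[str]:
    """Character k-grams of a whitespace-normalized, lowercased document."""
    text = " ".join(text.lower().split())
    return {text[i:i + k] for i in range(max(1, len(text) - k + 1))}


def seeded_hash(s: str, seed: int) -> int:
    """Stable 64-bit hash; different seeds act as independent hash functions."""
    digest = hashlib.blake2b(f"{seed}:{s}".encode("utf-8"), digest_size=8).digest()
    return int.from_bytes(digest, "little")


def minhash(text: str) -> list[int]:
    """For each seeded hash function, keep the minimum hash over all shingles."""
    grams = shingles(text)
    return [min(seeded_hash(g, seed) for g in grams) for seed in range(NUM_HASHES)]


def lsh_clusters(docs: dict[str, str]) -> list[set[str]]:
    """Union documents whose signatures are identical in at least one band."""
    parent = {doc_id: doc_id for doc_id in docs}

    def find(x: str) -> str:
        while parent[x] != x:
            parent[x] = parent[parent[x]]  # path halving
            x = parent[x]
        return x

    # Documents that share a band value land in the same bucket.
    buckets: dict[tuple, list[str]] = defaultdict(list)
    for doc_id, text in docs.items():
        sig = minhash(text)
        for b in range(BANDS):
            buckets[(b, tuple(sig[b * ROWS:(b + 1) * ROWS]))].append(doc_id)

    # Merge every bucket's members into one cluster.
    for ids in buckets.values():
        for other in ids[1:]:
            parent[find(other)] = find(ids[0])

    groups: dict[str, set[str]] = defaultdict(set)
    for doc_id in docs:
        groups[find(doc_id)].add(doc_id)
    return list(groups.values())


docs = {
    "a": "Baichuan-7B is a 7 billion parameter Chinese and English model.",
    "b": "Baichuan-7B is a 7 billion parameter Chinese and English model!",
    "c": "MMLU covers 57 subjects from elementary to professional level.",
}
print(lsh_clusters(docs))
```

Banding is the design choice that makes this scale: instead of comparing every pair of documents, each document is hashed into a few buckets, and only documents sharing a bucket are treated as candidates. More rows per band make matching stricter; more bands make it more lenient.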

Trained on more than a trillion tokens of high-quality Chinese and English data, baichuan-7B deeply fuses model operators to speed up computation, and adaptively tunes its model-parallelism and recomputation strategies to the task load and cluster configuration to improve training efficiency.

By efficiently scheduling communication during training, baichuan-7B overlaps computation with communication, achieving superlinear training speedup and an industry-leading training throughput of over 180 TFLOPS on a 1,000-GPU cluster.
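To put the throughput figure in perspective, here is a back-of-the-envelope estimate of idealized training time. It uses the common rule of thumb that training a dense transformer costs roughly 6 FLOPs per parameter per token. It also assumes, since the article does not say, that the 180 TFLOPS figure is sustained per-GPU throughput across 1,000 GPUs. Real training runs also spend time on restarts, evaluation, and checkpointing, so treat the result as a lower bound under these assumptions.

```python
# Rough, idealized estimate; every input below is an assumption for illustration.
N_PARAMS = 7e9          # model parameters
N_TOKENS = 1.2e12       # training tokens, as stated in the article
FLOPS_PER_GPU = 180e12  # assumes "180+ TFLOPS" is sustained per-GPU throughput
NUM_GPUS = 1000         # "thousand-card cluster"

train_flops = 6 * N_PARAMS * N_TOKENS  # ~6 * N * D rule of thumb
seconds = train_flops / (FLOPS_PER_GPU * NUM_GPUS)
print(f"{train_flops:.2e} FLOPs, about {seconds / 86400:.1f} days of pure compute")
```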

Meanwhile, existing open-source models have context windows of at most 2K tokens. For long-text modeling tasks, such as retrieval-augmented scenarios that bring in external knowledge, a longer context helps the model capture more contextual information during training and inference, so a 2K limit is a major constraint. The open-source baichuan-7B pre-trained model supports a 4K context window, which makes it applicable to a wider range of scenarios.

In addition, baichuan-7B’s training process was deeply optimized, with a more principled and stable training procedure and hyperparameter choices that greatly speed up convergence. Compared with models of the same parameter size, baichuan-7B achieves better results on key metrics such as perplexity (PPL) and training loss.
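For readers less familiar with the two metrics: perplexity is the exponential of the average per-token negative log-likelihood, and the usual language-modeling training loss is that same average (cross-entropy in nats), so PPL = exp(loss) and lower is better for both. The minimal sketch below computes perplexity from per-token log-probabilities; the function names and inputs are illustrative assumptions, not Baichuan’s evaluation code.

```python
import math


def perplexity(token_logprobs: list[float]) -> float:
    """PPL = exp(-(1/N) * sum of natural-log probabilities of the N tokens)."""
    if not token_logprobs:
        raise ValueError("need at least one token")
    mean_nll = -sum(token_logprobs) / len(token_logprobs)
    return math.exp(mean_nll)


def perplexity_from_loss(mean_cross_entropy_nats: float) -> float:
    """Training loss (mean cross-entropy in nats) maps directly to perplexity."""
    return math.exp(mean_cross_entropy_nats)


# A model that assigns probability 0.25 to every token has perplexity 4:
# it is as uncertain as a uniform choice among 4 options.
logprobs = [math.log(0.25)] * 10
print(perplexity(logprobs))
```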

Free for commercial use

In the spirit of open source, the baichuan-7B code is released under the Apache 2.0 license, and the model weights are released under a license that allows free commercial use after a simple registration.

The baichuan-7B release is comprehensive, including inference code, an INT4 quantization implementation, fine-tuning code, and the pre-trained model weights. The fine-tuning code makes it easy for users to adapt and optimize the model; the inference code and INT4 quantization implementation help developers deploy and apply the model at low cost; and with the pre-trained weights open-sourced, users can use the model directly for a wide range of experiments and research.
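The article does not say which INT4 scheme the release uses. To illustrate why 4-bit weights lower deployment cost, here is a minimal sketch of one common approach, symmetric per-group round-to-nearest quantization, written in plain Python. The group size of 4 (real implementations typically use much larger groups) and all function names are assumptions for illustration; this is not Baichuan’s implementation.

```python
def quantize_int4(weights: list[float], group_size: int = 4):
    """Symmetric per-group INT4 quantization with round-to-nearest.

    Each group of `group_size` weights shares one float scale. The largest
    magnitude in a group maps to +/-7, so the code -8 is unused here.
    """
    codes, scales = [], []
    for start in range(0, len(weights), group_size):
        group = weights[start:start + group_size]
        max_abs = max(abs(w) for w in group)
        scale = max_abs / 7 if max_abs > 0 else 1.0
        scales.append(scale)
        codes.extend(max(-8, min(7, round(w / scale))) for w in group)
    return codes, scales


def dequantize_int4(codes: list[int], scales: list[float], group_size: int = 4):
    """Recover approximate float weights: code * scale of its group."""
    return [c * scales[i // group_size] for i, c in enumerate(codes)]


def pack_nibbles(codes: list[int]) -> bytes:
    """Store two signed 4-bit codes per byte (two's-complement nibbles)."""
    out = bytearray()
    for i in range(0, len(codes), 2):
        lo = codes[i] & 0xF
        hi = (codes[i + 1] & 0xF) if i + 1 < len(codes) else 0
        out.append(lo | (hi << 4))
    return bytes(out)


w = [0.12, -0.5, 0.33, 0.07, 1.4, -0.9, 0.0, 0.25]
codes, scales = quantize_int4(w)
restored = dequantize_int4(codes, scales)
packed = pack_nibbles(codes)
print(codes, f"{len(packed)} bytes for {len(w)} weights")
```

Each weight then occupies half a byte plus a small share of its group’s scale, versus two bytes in FP16, which is where the lower memory footprint comes from. The per-group scale limits how much a single outlier weight degrades the precision of the others.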

Two top universities, Peking University and Tsinghua University, are reportedly among the first to use the baichuan-7B model in their research, and plan to work closely with Baichuan Intelligence in the future to jointly advance the model’s application and development.

According to Yiqun Liu, professor in the Department of Computer Science and Technology and director of the Institute for Internet Judiciary at Tsinghua University, the baichuan-7B model performs very well in Chinese, and releasing it as open source with free commercial use reflects an open attitude that not only contributes to the community but also advances the technology. His team plans to conduct research on judicial AI based on the baichuan-7B model.

Yang Yaodong, assistant professor at the Institute for Artificial Intelligence, Peking University, believes that open-sourcing baichuan-7B will give an important boost to the ecosystem of Chinese foundation language models and to academic research. He plans to keep following developments in related fields and to conduct further in-depth research on the safety and alignment of Chinese large language models.

“The release of this open-source model, two months after the company was founded, is Baichuan Intelligence’s first milestone and a good start for us,” said Xiaochuan Wang, CEO of Baichuan Intelligence. The baichuan-7B model not only contributes to China’s AGI efforts but also adds to the global open-source large-model community.

(Source: Baichuan)