At 14:42 on August 6, 2024, the Long March 6A rocket lifted off from the Taiyuan Satellite Launch Center, sending 18 Qianfan Polar Orbit 01 Group satellites to their designated orbit. The official press release described it in routine terms: a standard, successful launch with no anomalies. Yet in the context of China’s commercial space ambitions, this was anything but routine. It marked the moment when China’s long-discussed, often-debated, and frequently doubted low-Earth-orbit (LEO) broadband constellation plans moved from paper proposals and investment pitch decks into operational reality.

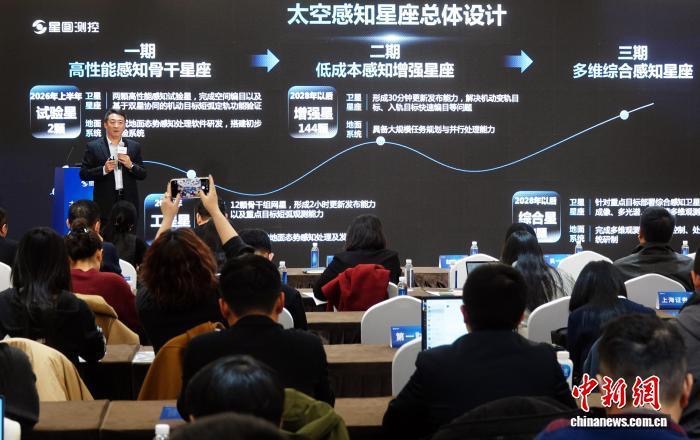

For years, China’s vision of a ten-thousand-satellite LEO internet constellation existed primarily in regulatory filings, venture capital presentations, and closed-door policy discussions. The Qianfan (G60) constellation, alongside the state-backed China Satellite Network (GW) constellation, represented an ambition comparable in scale to SpaceX’s Starlink. But ambition alone does not secure orbital position, spectrum rights, or industrial capability. What changed in 2024 was not simply technical readiness, but the alignment of regulatory deadlines, manufacturing capacity, launch economics, state procurement logic, and geopolitical pressure. Between 2024 and 2026, these forces converged to create a narrow strategic window that China could not afford to miss.

The most immediate driver is institutional rather than technological: the rules of the International Telecommunication Union (ITU). Contrary to popular perception, low Earth orbit is not an infinite commons. Orbital shells suitable for broadband constellations, along with the associated radio frequency bands, are scarce and governed by a “first-filed, first-brought-into-use” regime. Once a country files a constellation plan, it must bring the assigned frequencies into use within seven years. Counting from the same filing date, it must then deploy 10 percent of the satellites within nine years, 50 percent within twelve, and the full constellation within fourteen. Failure to meet these milestones risks forfeiting spectrum and orbital priority to later applicants.

China’s major LEO filings were concentrated around 2020 and 2021, which makes 2027 the critical activation deadline for the earliest of them. Working backward, large-scale deployment must accelerate by 2026, and industrial validation must be completed in 2024–2025. The August 2024 launch was therefore not symbolic; it was a procedural necessity. Without near-term in-orbit validation and initial network activation, the country risks losing hard-won spectrum claims in the increasingly contested Ku and Ka bands and the emerging Q/V bands. What appears to be commercial competition is, at root, a regulatory race against time.
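The deadline arithmetic can be made explicit with a minimal Python sketch. It assumes only the year offsets quoted in this article (7, 9, 12, and 14 years from filing) and works at whole-year granularity; the names `MILESTONE_OFFSETS_YEARS` and `milestone_years` are illustrative, and real deadlines depend on the exact filing date of each network.

```python
from typing import Dict

# Year offsets from the filing date, as quoted in the article.
# These are the article's figures, not an authoritative reading of ITU rules.
MILESTONE_OFFSETS_YEARS: Dict[str, int] = {
    "frequencies brought into use": 7,
    "10% of satellites deployed": 9,
    "50% of satellites deployed": 12,
    "100% of satellites deployed": 14,
}


def milestone_years(filing_year: int) -> Dict[str, int]:
    """Return the calendar year by which each milestone falls due."""
    return {
        name: filing_year + offset
        for name, offset in MILESTONE_OFFSETS_YEARS.items()
    }


if __name__ == "__main__":
    # Filing years taken from the article ("around 2020 and 2021").
    for filing_year in (2020, 2021):
        print(f"Filed {filing_year}:")
        for name, due in milestone_years(filing_year).items():
            print(f"  {name}: by {due}")
```

For a 2020 filing this yields 2027, 2029, 2032, and 2034, which is why a first operational launch in 2024 leaves little slack before the activation deadline.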

The second structural shift lies in industrialization. Previous generations of satellite programs—both in China and globally—were constrained by artisanal production models. Satellites were treated as bespoke, high-cost assets, often costing hundreds of millions of dollars and requiring months or years of assembly. That economic model proved incompatible with large constellations, as demonstrated by the bankruptcy of early efforts such as the original Iridium in the 1990s.

The current wave differs because satellite manufacturing is being reconfigured along consumer electronics logic. Facilities such as Shanghai’s G60 digital satellite factory operate pulse production lines in which standardized satellite buses move between modular workstations. Greater use of commercial off-the-shelf components, combined with software-based redundancy architectures, has dramatically reduced unit cost. Instead of relying exclusively on radiation-hardened aerospace chips, manufacturers increasingly employ industrial- or automotive-grade components with redundant system design to maintain reliability at lower expense. Production cycles have compressed to the point where satellites can be completed in days rather than months, and unit costs have fallen by an order of magnitude compared to traditional platforms. Only under such conditions can a constellation numbering in the tens of thousands be financially viable.

Launch economics represent the third decisive variable. Satellite cost reductions alone are insufficient if launch prices remain prohibitive. China’s commercial launch sector has therefore shifted focus from small solid-fuel rockets toward large, liquid-fueled vehicles designed for higher payload capacity and eventual reusability. The technological maturation of liquid oxygen–methane engines, stainless-steel structures, additive manufacturing for engine components, and vertical takeoff and landing (VTVL) recovery experiments indicates that domestic firms are converging on the same cost-disruption logic that enabled Falcon 9’s dominance.

The recent high-profile test anomalies matter less as short-term successes or failures than as indicators of scale and ambition. Companies are now attempting full-system tests of vehicles in the 3–4 meter diameter class with hundreds of tons of thrust, which makes them direct competitors to medium-lift reusable rockets globally. As engine performance stabilizes and recovery algorithms improve, per-kilogram launch costs are expected to fall substantially. If liquid reusable rockets reach a reliable operational cadence around 2025–2026, constellation deployment can move from demonstration to a mass-production tempo.

A fourth transformation concerns the role of the state. Historically, China’s space sector operated under a cost-plus procurement model in which the government was designer, funder, operator, and end user. That structure discouraged cost discipline and confined private-sector participation to subcontracting roles. The emerging model shifts the state from sole operator to anchor customer. Rather than purchasing rockets and satellites as hardware, government entities increasingly purchase launch services and data services. This approach mirrors NASA’s Commercial Orbital Transportation Services (COTS) program, which catalyzed SpaceX’s early growth by guaranteeing demand rather than micromanaging development.

Institutional changes reinforce this shift. The construction of the Hainan Commercial Space Launch Site, including dedicated pads designed for private liquid rockets, reduces bottlenecks associated with sharing state-operated launch facilities. Policy documents outlining commercial space development for 2025–2027 formally classify the sector as part of “new quality productive forces,” signaling sustained political backing and long-term integration into national industrial strategy. In parallel, state-affiliated capital has entered the sector more systematically, providing longer investment horizons than traditional venture capital cycles.

Overlaying these domestic dynamics is the external pressure exerted by SpaceX. With thousands of Starlink satellites already in orbit and an ultimate target of tens of thousands, the scale differential is stark. Beyond civilian broadband, the operational performance of distributed LEO networks in conflict scenarios has demonstrated their resilience, low latency, and strategic utility. The prospect of Starshield and the continued expansion of vertically integrated launch and satellite manufacturing create a form of structural pressure: if one actor achieves overwhelming presence in key orbital shells, late entrants face both regulatory and physical crowding constraints.

The development of Starship amplifies this pressure. Should fully reusable heavy-lift vehicles achieve routine operations, deployment capacity could increase by an order of magnitude. In such a scenario, orbital real estate may be populated at unprecedented speed, raising both competitive and debris-management implications. For China, delaying large-scale deployment risks entering a future market in which the most valuable shells and frequencies are already saturated.

Taken together, the 2024–2026 period represents less a moment of entrepreneurial exuberance than a compressed strategic cycle. ITU deadlines impose a fixed timetable; industrial upgrading lowers cost thresholds; launch technology approaches economic viability; state procurement logic shifts to service-based support; and geopolitical competition eliminates the option of gradualism. The August 2024 launch thus signaled not merely technical progress but the beginning of a sustained acceleration phase in China’s LEO deployment.

The outcome remains uncertain. Successfully deploying and operating a large-scale constellation would grant China independent broadband infrastructure, strengthen its bargaining position in global spectrum governance, and anchor a new segment of the digital economy in orbit. Failure to scale in time could result in lost filings, constrained orbital access, and structural disadvantage in future space-based communications markets.

The countdown is not rhetorical. It is embedded in international regulation, industrial investment cycles, and competitive launch manifests. Whether the current window produces a durable presence in low Earth orbit will depend on execution over the next several years, not on a single launch—but that launch marked the point at which deferral was no longer an option.

Source: cnsa.gov.cn, Xinhua, microstate cas